HWS News

15 May 2025 • Research

A Bat-Like Robot Design Using Echolocation Could Help Find Disaster Survivors

Faculty and student research in AI and robotics merges to create more effective and cost-efficient methods for search and rescue missions.

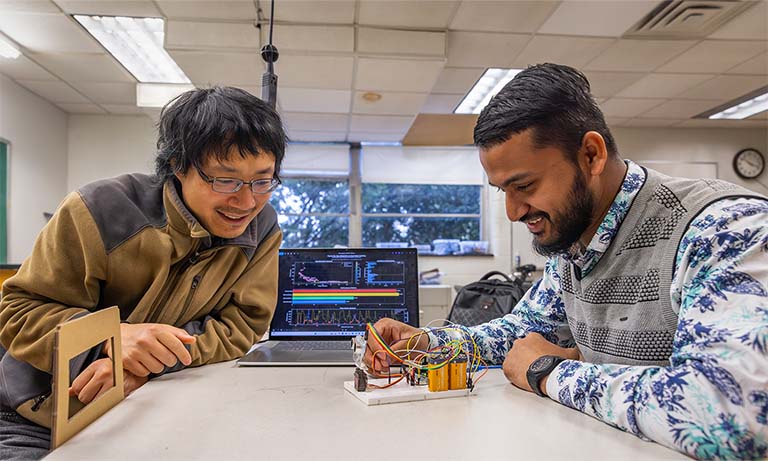

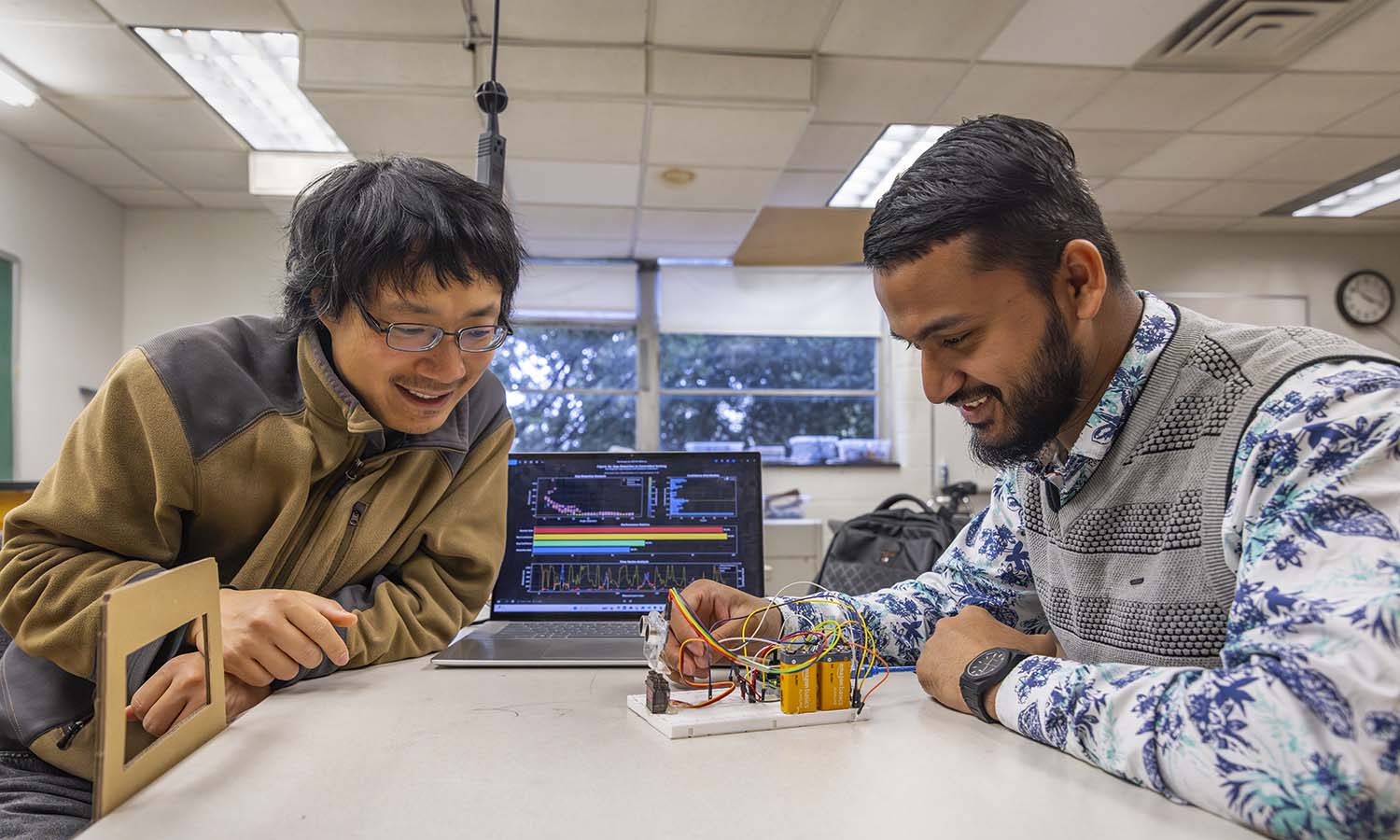

Khairul Islam ’25 overcame his aversion to artificial intelligence thanks to Assistant Professor of Mathematics and Computer Science Hanqing Hu.

Hu’s class, “Topics in Computer Science,” Islam says, helped shift his mindset.

“That was a class that literally clicked so many things in my mind,” Islam recalls. He was struggling with what seemed like an unsolvable problem when Hu helped him from 6 p.m. to 9 p.m. over Zoom. “I’ll never forget that. We solved my whole problem online,” Islam says.

Hu asks, “I did that?”

“Yes! It was the first class I had with you,” Islam replies.

It was the beginning of a partnership that merged Islam’s passion for robotics with Hu’s expertise in AI to lay the groundwork for a potentially life-saving technology: a sensor-equipped robot designed to find openings it can pass through in dangerous environments.

“We are thinking about things like rescue robots where the environment is very complex. There could be a forest fire where you have a drone search for people, so it has to go through branches,” Hu says. “The majority of the research now is focused on evading objects. If they detect an object in the front, they go around it.”

In many cases, however, there is no way to get around an obstacle. Islam and Hu wanted to create a method for their robot to find gaps it could squeeze through to accomplish its missions.

Technology already exists to map environments in fine detail, which makes gaps easy to find, but it is expensive. A typical light-based sensor can cost between $200 and $500 and provides far more information than the job requires. Islam and Hu’s sensor costs only 47 cents.

That low cost is especially helpful in high-risk environments where drones are likely to be damaged in operation. Although it is less accurate than costlier sensors, Islam and Hu’s sensor uses machine learning to identify patterns in its measurements that indicate gaps in the surroundings.

In smoky environments, laser-based sensors fall short. “Those are based on light, but the smoke will block the laser,” Hu explains. “But our method can be used in smoke because it uses sound instead.”

Echolocation is by no means new to drone technology, but using it to locate gaps in the environment is. The robot’s sensor rotates constantly, taking distance measurements. Combining real-time processing, machine learning, and visualization components, the system uses four layers of technology to detect gaps.

The result is 95 percent accuracy, Islam says, versus 70 to 80 percent accuracy for the same sensor without machine learning.
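To give a rough sense of the raw data a rotating, sound-based sensor works with, here is a minimal Python sketch. It is illustrative only: the article does not describe the team’s hardware, code, or machine-learning model. The sketch assumes an ultrasonic sensor that reports the round-trip time of each echo, converts that time to distance using a speed of sound of about 343 meters per second, and then flags angular spans in one sweep where every reading is farther away than a chosen clearance. The function names `echo_to_distance` and `find_gaps` and the parameters `clearance_m` and `min_width_deg` are hypothetical, and the simple threshold rule stands in for, rather than reproduces, the learned pattern recognition Islam and Hu describe.

```python
from typing import List, Optional, Tuple

# Approximate speed of sound in dry air at about 20 °C (an assumption; it varies with temperature).
SPEED_OF_SOUND_M_PER_S = 343.0


def echo_to_distance(echo_time_s: float) -> float:
    """Convert a round-trip echo time (seconds) into a one-way distance (meters).

    The sound travels out to the obstacle and back, so the path is halved.
    """
    return SPEED_OF_SOUND_M_PER_S * echo_time_s / 2.0


def find_gaps(
    readings: List[Tuple[float, float]],
    clearance_m: float,
    min_width_deg: float,
) -> List[Tuple[float, float]]:
    """Hypothetical threshold rule, not the team's machine-learning method.

    readings: (angle_deg, distance_m) pairs from one sweep, sorted by angle.
    Returns (start_deg, end_deg) spans where every reading exceeds clearance_m
    and the span is at least min_width_deg wide.
    """
    gaps: List[Tuple[float, float]] = []
    start: Optional[float] = None  # angle where the current open span began
    last_open: Optional[float] = None  # most recent angle inside the open span

    for angle, dist in readings:
        if dist > clearance_m:
            # Reading is clear: open a new span or extend the current one.
            if start is None:
                start = angle
            last_open = angle
        else:
            # Obstacle reached: close the current span if it was wide enough.
            if start is not None and last_open - start >= min_width_deg:
                gaps.append((start, last_open))
            start = None

    # A span may still be open when the sweep ends.
    if start is not None and last_open - start >= min_width_deg:
        gaps.append((start, last_open))
    return gaps
```

For example, a sweep of `[(0, 0.4), (10, 0.5), (20, 2.0), (30, 2.1), (40, 1.9), (50, 0.3), (60, 2.5)]` with `clearance_m=1.0` and `min_width_deg=15` yields a single gap from 20 to 40 degrees; the lone clear reading at 60 degrees is too narrow to count. A fixed threshold like this is easily thrown off by noisy or scattered echoes, which is the sort of weakness that motivates using a learned model instead.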

Islam presented their paper, which included work by Anik Biswas ’28, in April via Zoom at the Association for Computing Machinery’s 2025 International Conference on Multimodal Interaction. The research was intense, Islam says, but it didn’t deter him. On the contrary.

“For me, academia is research, and research is self-learning. When you're just studying for a class, you might memorize just to get by,” he says. “But in research, you feel the need to truly understand. It's beautiful!”

Originally from Dhaka, Bangladesh, Islam says he will be the first person in his extended family with an undergraduate degree. “That's why this journey matters so much to me,” Islam says.

“I started doing research back home just for fun. Then at Hobart and William Smith, my professors noticed my work, I got course credit during semesters and even got paid to conduct research during summers,” Islam says. “My hobby became my academic path! I've spent countless weekends and breaks in Eaton 116, and honestly, I'm one of the happiest people because of it.”

Islam plans to attend graduate school in the fall. There, he intends to develop robotic arms that use AI, robotics, and machine vision to detect and remove weeds in agricultural fields without damaging crops. By removing weeds mechanically, the system would allow growers to forgo environmentally harmful herbicides.